MEXaction2: action detection and localization dataset

Goal

Human actions contain relevant information regarding the cultural content of video archives for research, educational or entertainment purposes. The aim of this "MEXaction2" dataset is to support the development and evaluation of methods for 'spotting' instances of short actions in a relatively large video database. For each action class, such a method should detect instances of this class in the video database and output the temporal boundaries of these detections, with an associated 'confidence' score. This task can also be seen as 'action retrieval': the 'query' is an action class and the results are instances of the class, ordered by decreasing 'confidence' score.

Dataset description

The dataset contains videos from three sources:

- INA videos. A large collection of 117 videos (for a total of 77 hours), was extracted from the archives of the Institut National de l'Audiovisuel (France). It contains videos produced between 1945 and 2011, digitized from film to 512x384 digital video encoded using MPEG-4 h264 AVC compression. Even though there are many instances of the actions to spot, these instances are relatively rare in this video collection, i.e. the total duration of the instances is less than 3% of the total duration of the videos. The video content in this collection was divided into three parts: training, parameter validation and testing.

- YouTube clips. From additional videos collected from YouTube, we provide 588 short clips, each containing only one instance of an action to spot (everything within the boundaries of a clip belongs to an action instance). All these instances should be only used for training.

- UCF101 Horse Riding clips. We add as training instances for the HorseRiding class the Horse Riding clips from the UCF101 dataset. All these instances should be only used for training.

There are two annotated actions, described and illustrated below:

- BullChargeCape: in the context of a bull fight, the bull charges the matador who dangles a cape to distract the animal. The actions were annotated to include the bull's charge, the movement of the cape and the feint of the matador. The definition is strict: for example, sequences showing a bull charging alone, when a matador is not present, or when a matador does not dangle a cape, are not instances of this class. Instances are, on average, 1 second long and duration variance is rather low.

- HorseRiding: instances of one or several persons riding horses. Some instances are during bull fights. The definition is strict: for example, a horse or many horses running without rider(s) are not instances of the class. Also, a horse and rider that are not moving are not an instance of the class either. Instances are between 0.5 and 10 seconds long (duration variance is high).

The total number of annotated examples is high. The number of instances for training, validation and test is given in the next table:

| Action | Nb. training instances | Nb. validation instances | Nb. test instances |

| BullChargeCape | 917 | 217 | 190 |

| HorseRiding | 419 | 93 | 139 |

Beside the fact that the total amount of annotated video is relatively large compared to other existing datasets, this dataset is also interesting because it raises several difficulties:

- High imbalance between non-relevant video sequences and relevant ones (instances of an action of interest).

- High variability in point of view, background movement and action duration for HorseRiding.

- Variability in image quality: old videos have lower resolution and are in black and white, while the newest ones are in HD.

Access to the annotated dataset

INA videos

This part of the Mex dataset can be made available after registering (step 1 below) and accepting all the conditions specified in the conditions of use you will receive from INA. Please note that registration is restricted to legal entities having a research department or a research activity.

Step 1: please first register by sending an e-mail to dataset (at) ina.fr. You will receive a user/password combination by e-mail once your registration is validated.

Step 2: the files are available through Secure FTP at ftps://ftp-dataset.ina.fr:2122

For any difficulty with the access to the INA servers please contact dataset (at) ina.fr

This part of the Mex dataset is intended for finalizing, experimenting with and evaluating search and analysis tools for multimedia content, strictly as part of a scientific research activity.

YouTube clips

Mexican TV videos, catalogued by the INAH, INALI, Fonoteca Nacional (Mexico) and others, were downloaded from the YouTube web channels of some Mexican organisms and converted to MP4 format. From each video, only clips containing training examples of the actions of interest were retained. This dataset is intended for scientific research only. The set of clips can be downloaded here.

UCF101 Horse Riding clips

Are added as training instances for the HorseRiding class the Horse Riding clips from the UCF101 dataset. Please comply with the respective terms of use of the UCF101 dataset.

Evaluation

Action detection and localization is evaluated here as a retrieval problem: the system must produce a list of detections (temporal boundaries) with positive scores. Sorting these results by decreasing score allows to obtain precision/recall curves and to compute the Average Precision (AP) in order to characterize the detection performance.

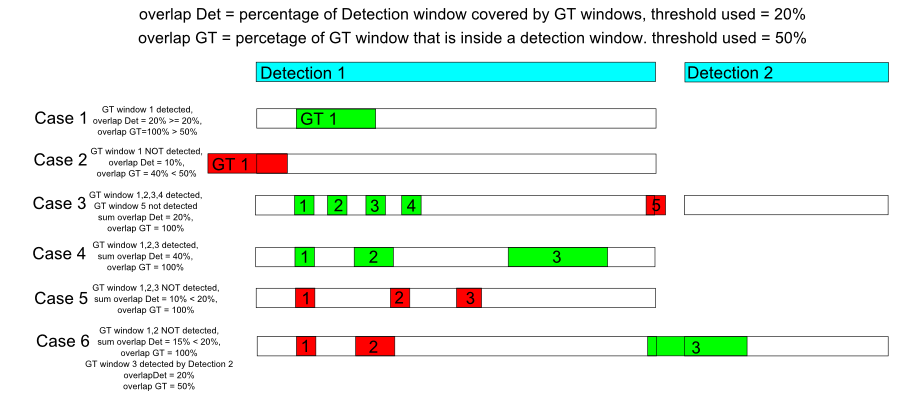

Definition of positive and negative detections: for the Mex dataset the actions are rare but often occur in clusters in which they are separated by relatively few frames (actions appear successively in a chain). Thus, a detection window is likely to cover several ground truth (GT) annotations. We adopt an evaluation criterion that takes this into account. GT annotations Bi are marked as detected if they are at least 50% inside a detection window A:

| A ∩ Bi | ⁄ | Bi | > 0.5 (overlap Detection)

and cover at least 20% of the time span of the detection window:

∑i | A ∩ Bi | ⁄ | A | > 0.2 (overlap GT)

In the figure above,

- Detection windows (as provided by your system) are in CYAN

- Ground truth windows that are considered 'detected' are in GREEN

- Ground truth windows that are considered 'not detected' are in RED

Baseline results

To support comparisons, we provide below the results obtained by an action localization system using:

- Dense Trajectory features (ref. [1], with this implementation), pooled over 30-frame windows.

- Bag of Visual Words to represent the statistical distribution of these features over the windows (K=4000 clusters, descriptor size was 396).

- SVM with Histogram Intersection kernel for detection (with LibSVM implementation), the C parameter being selected by using the parameter validation set.

- Average Precision is computed as described in the evaluation section above; we average it over 3 different sets of negative training examples.

| Action | Average AP |

| BullChargeCape | 0.5026 |

| HorseRiding | 0.4076 |

Contributors

Contact: if needed, you can contact us at andrei dot stoian at gmail dot com and michel dot crucianu at cnam dot fr. Please note that we can only provide limited support.

Citation

If you refer to this dataset, please call it "MEXaction2" and cite this web page http://mexculture.cnam.fr/xwiki/bin/view/Datasets/Mex+action+dataset

The INA part of the dataset, called "MEXaction", with a more challenging partitioning between training / validation / test, was employed in the following publications:

Stoian, A., Ferecatu, M., Benois-Pineau, J., Crucianu, M. Fast action localization in large scale video archives. IEEE Transactions on Circuits and Systems for Video Technology (IEEE TCSVT), DOI 10.1109/TCSVT.2015.2475835.

Stoian, A., Ferecatu, M., Benois-Pineau, J., Crucianu, M. Scalable action localization with kernel-space hashing, IEEE International Conference on Image Processing (ICIP), Québec, Canada, Sept. 27-30, 2015.

References

[1] H. Wang, A. Kläser, C. Schmid, and C.L. Liu. Dense trajectories and motion boundary descriptors for action recognition. International Journal of Computer Vision, 103(1): 60-79, May 2013.